Probable Universe – An infinite combination of alternate worlds

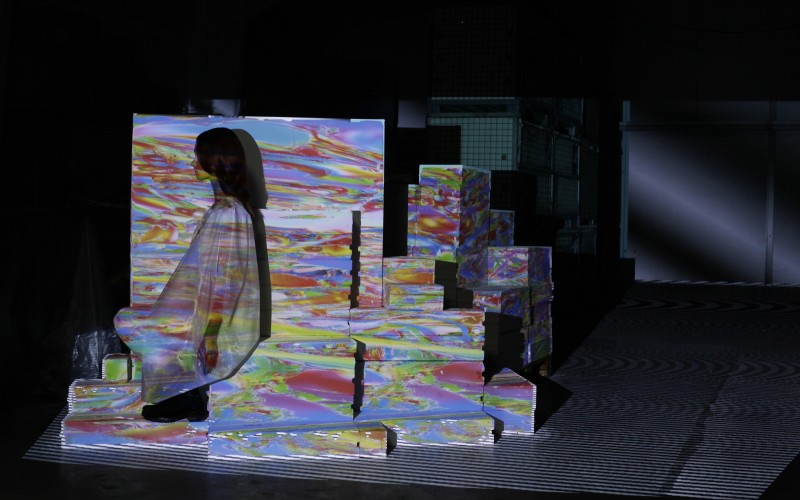

Created by convivial project (Ann-Kristin Abel and Paul Ferragut), The Probable Universe is an interactive audio-visual installation generating an infinite combination of projected worlds in a physical environment using an industrial robotic arm. The robot autonomously moves through the space, focusing its gaze on different areas and objects, revealing and superimposing content with a mapping application. The textures created are generative and allow for an infinite number of possible worlds and stories you can see within the space.

The Probable Universe is born from the insight that ‘all the matter of the universe is made up of atoms and subatomic particles that are ruled by probability and not certainty’ (Brian Greene). The concept study has been inspired and driven by the strange and fascinating principles of quantum mechanics and string theory, a potential theory of everything. String theory predicts the existence of a multiverse, the idea that an infinite number of universes exist parallel to ours. With The Probable Universe, convivial is looking to uncover the different layers and hidden dimensions of these alternate worlds.

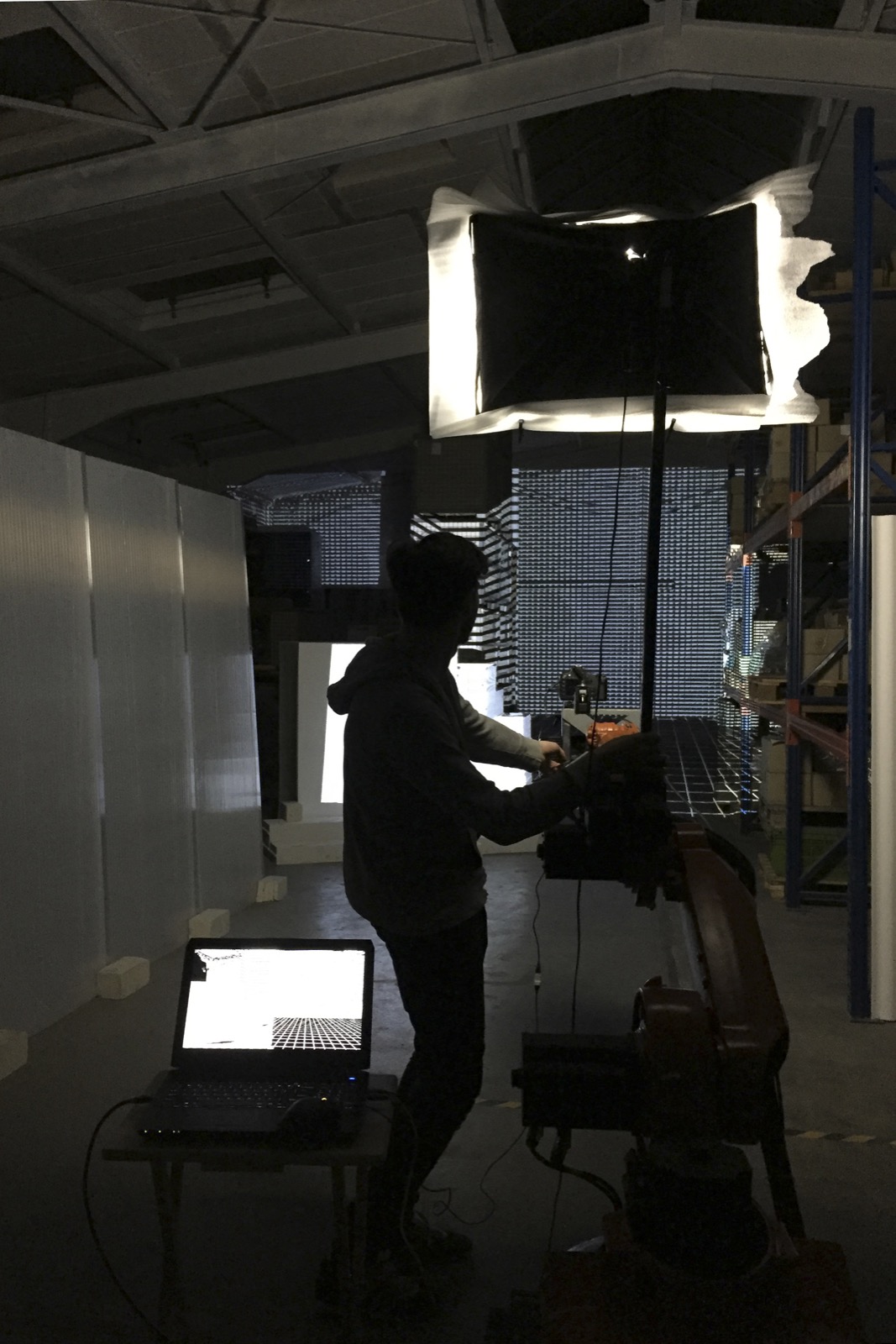

The project was made using openFrameworks and multiple add-ons from the community (such as ofxTimeline, ofxGui, ofxOsc and ofxPCL), along with PCL; some shaders are remixed from Shadertoy. The robotic arm is a KUKA KR 6/2 with a Windows 95 KRC2 controller, generously lent to the team by Dipl.-Ing. Siegfried Müller Druckgießerei in Velbert. Communication with the robot and its old controller was possible through openShowvar, an application made by Massimiliano Fago. After arranging the space and adding the set elements, the team scanned it with a Faro Focus 3D laser scanner, then turned the point cloud from the scan into a mesh using MeshLab. A model was filmed with multiple Kinect sensors in order to replay the model’s point cloud in the space using projection. Visual effects (mostly shaders) were then wrapped as textures on the virtual mesh; the projection acts as a camera, with its position being updated according to the robot’s motion and position. See the making-of video below for more.
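To make the last step more concrete, here is a minimal sketch of how a virtual camera could follow the robot. It is not the team's code. It assumes the controller reports a Cartesian pose as X, Y, Z in millimetres plus A, B, C angles in degrees, with the orientation composed as Rz(A)·Ry(B)·Rx(C). The names `RobotPose`, `poseToMatrix`, `invertRigid` and `apply` are hypothetical, and plain C++ is used instead of openFrameworks types so the example stays self-contained.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Row-major 4x4 matrix (hypothetical helper type, not an openFrameworks class).
using Mat4 = std::array<std::array<double, 4>, 4>;
using Vec3 = std::array<double, 3>;

// Assumed pose layout: position in mm, angles A (about Z), B (about Y), C (about X) in degrees.
struct RobotPose {
    double x, y, z;
    double a, b, c;
};

// Builds the camera-to-world transform, assuming R = Rz(A) * Ry(B) * Rx(C).
Mat4 poseToMatrix(const RobotPose& p) {
    const double degToRad = 3.14159265358979323846 / 180.0;
    const double ca = std::cos(p.a * degToRad), sa = std::sin(p.a * degToRad);
    const double cb = std::cos(p.b * degToRad), sb = std::sin(p.b * degToRad);
    const double cc = std::cos(p.c * degToRad), sc = std::sin(p.c * degToRad);

    Mat4 m{};
    m[0] = {ca * cb, ca * sb * sc - sa * cc, ca * sb * cc + sa * sc, p.x};
    m[1] = {sa * cb, sa * sb * sc + ca * cc, sa * sb * cc - ca * sc, p.y};
    m[2] = {-sb, cb * sc, cb * cc, p.z};
    m[3] = {0.0, 0.0, 0.0, 1.0};
    return m;
}

// Inverts a rigid transform [R t; 0 1] as [R^T, -R^T t; 0 1].
// The result is the view matrix (world-to-camera) for the virtual camera.
Mat4 invertRigid(const Mat4& m) {
    assert(m[3][0] == 0.0 && m[3][1] == 0.0 && m[3][2] == 0.0 && m[3][3] == 1.0);
    Mat4 inv{};
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) {
            inv[i][j] = m[j][i];  // transpose the rotation block
        }
    }
    for (int i = 0; i < 3; ++i) {
        inv[i][3] = -(inv[i][0] * m[0][3] + inv[i][1] * m[1][3] + inv[i][2] * m[2][3]);
    }
    inv[3] = {0.0, 0.0, 0.0, 1.0};
    return inv;
}

// Applies a 4x4 transform to a 3D point (w = 1).
Vec3 apply(const Mat4& m, const Vec3& p) {
    Vec3 out{};
    for (int i = 0; i < 3; ++i) {
        out[i] = m[i][0] * p[0] + m[i][1] * p[1] + m[i][2] * p[2] + m[i][3];
    }
    return out;
}
```

Each time a new pose arrives, the view matrix would be recomputed with `invertRigid(poseToMatrix(pose))` and used to render the textured mesh from the projector's point of view. A real setup would also need the fixed offset between the robot flange and the projector lens, and a projection matrix matching the projector's optics; both are left out here.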